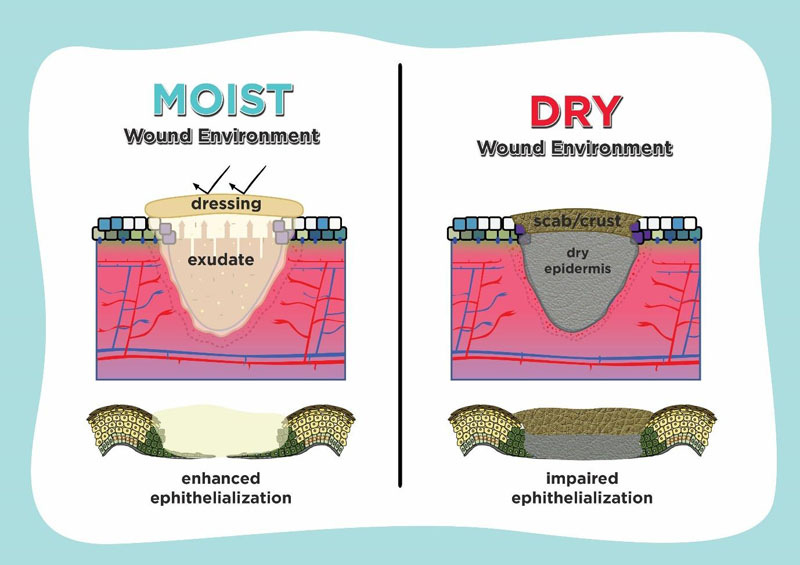

Have you ever had a wound that just wouldn't seem to heal properly? For me, it was a recent leg wound that lingered for two frustrating months. No matter what I tried, the wound kept drying out, cracking, and re-opening at the slightest movement or pressure. It was only when I started moisturizing the wound with petroleum jelly and keeping it covered that the healing process kicked into high gear. What had been an agonizingly slow recovery suddenly turned around, and within just 5-6 days the wound was nearly fully healed.

This experience turned out to be the perfect analogy for understanding both the challenges of training large language models (LLMs) to align with desired objectives and behaviors, and the keys to doing it successfully.

You see, a wound requires very specific conditions to heal properly. It needs the right level of moisture, protection from irritation, and the space to activate its innate biological healing processes. Without those conditions being met, the wound keeps getting stuck in an unproductive cycle of re-injury and stalled recovery.

Aligning LLMs, I believe, is very similar if we treat them like biological organisms. These powerful AI models need the right "environmental conditions" in their training process for their learning to unfold in the intended direction. Feed them the wrong data inputs, reward functions, or training processes, and you risk the model developing unwanted behaviors or outputs, akin to a wound drying out and cracking apart.

Just as introducing petroleum jelly and a covering provided my leg wound with the moisture seal and protective layer to finally heal quickly, carefully curating the right training data, objectives, reward models, and AI alignment techniques will yield an ideal "learning environment" for large language models.

This allows their intrinsic capabilities to emerge productively and helps keep their development aligned with specified principles like truth, safety, and ethics. The wrong conditions lead to stalled progress or misalignment. But the right moisture and covering unleash healing, just as the right training regimen unleashes an LLM's potential.

Biological processes like wound healing depend on an ideal cellular environment. Training aligned AI systems is a similar endeavor: by controlling the data, algorithms, rewards, and principles we employ in an LLM's training, we create the CONDITIONS for its intrinsic learning process to unfurl in the intended direction.

My leg wound taught me that sometimes the solution is not cutting-edge treatments or expensive therapies, but simply creating the proper environmental conditions for the innate biological intelligence to run its course productively. Perhaps the same humble lesson can guide us in the measured and meticulous work of developing safe and aligned artificial intelligence – not forcing it, but setting the stage for its learning process to blossom as intended.